Transparent AI: Using Distributed Ledgers to Audit Models and Data

How blockchain can make AI accountable, from model training to data provenance.

TL;DR

AI is only as trustworthy as its data, and that data still lives in the dark.

Blockchain can change that by recording how models are trained, what datasets shaped them, and who changed what along the way.

The goal isn’t to slow AI down; it’s to make it verifiable.

Here’s how distributed ledgers are being used to track data provenance, audit AI models, and build transparency into the algorithms shaping our future.

The Trust Problem in AI

Every AI company sells the same promise: trust us, the model works.

Ask how it works, what data trained it, who labeled it, and the answers blur. Even engineers admit some models behave like black boxes: accurate, powerful, opaque.

In IBM’s Global AI Adoption Index 2023, 83 percent of IT professionals at enterprises exploring or deploying AI say that explainability is important to their business, a sign that trust, not just performance, has become central to adoption.

IBM’s accompanying AI in Action 2024 report notes that 42 percent of large enterprises already use AI in production, yet ethical and trust concerns (23 percent) remain among the top deployment barriers.

Even inside these organizations, model decisions are often inscrutable.

We don’t let banks skip audits.

We don’t let pharmaceutical firms hide lab records.

So why let AI systems, which now shape decisions about jobs, credit, and criminal justice, run without verifiable trails?

Opacity isn’t just a technical issue; it’s structural.

Training data is guarded as intellectual property, regulators can’t keep up, and users get results without receipts.

That’s where blockchain becomes useful, not as hype, but as infrastructure.

A ledger doesn’t just record value; it records timestamped entries that can’t be quietly rewritten.

What if that same mechanism logged model training steps, dataset versions, and access changes?

It could turn AI’s black boxes into glass boxes: systems whose history can be traced without exposing sensitive data.

Instead of hoping an AI is “fair,” we could verify its provenance.

Projects such as Hyperledger Fabric (long backed by IBM), Ocean Protocol, and Fetch.ai are already experimenting with on-chain records for datasets, models, and agent activity.

The EU AI Act now mandates record-keeping and logging for high-risk systems, and blockchain is emerging as one candidate for a compliance backbone.

AI doesn’t need to be perfectly explainable, just provably honest.

Why Transparency Matters More Than Accuracy

AI’s scoreboard has always been accuracy.

But accuracy doesn’t equal trust, not when the reasoning is hidden.

A hiring algorithm might rank one résumé higher because of phrasing quirks. “Ninety-percent accuracy” means little if we can’t explain why. When bias appears, there’s often no audit trail to trace it back to its source.

Transparency is the missing layer of accountability, the difference between “it works” and “we can prove it works.”

AI systems rely on vast, untracked data: scraped web text, licensed corpora, synthetic augmentations. Once folded into training, those sources vanish from view. Even careful teams struggle to trace lineage after deployment.

That’s why provenance, the ability to prove where data came from and how it was transformed, is becoming central to AI governance.

According to Deloitte’s 2024 AI Governance survey, only 25 percent of corporate leaders feel highly prepared to manage AI governance or auditability, a gap regulators are moving to close.

Blockchain offers an elegant fix.

Instead of mutable internal logs, ledgers create immutable checkpoints.

Each dataset, model version, or fine-tuning step can be hashed and time-stamped. You don’t see the data itself, but you can verify nothing’s been altered.
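To make that concrete, here is a minimal Python sketch using only the standard library: hash a dataset’s bytes, wrap the digest in a timestamped record, and later recompute the digest to check that nothing changed. The record fields and helper names are hypothetical, and the local timestamp is only self-reported; in the setup described here, the small record would be anchored on a ledger, whose block time supplies an independent timestamp.

```python
import hashlib
from datetime import datetime, timezone


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 digest of a dataset's raw bytes as hex."""
    return hashlib.sha256(data).hexdigest()


def make_record(name: str, data: bytes) -> dict:
    """Build a hypothetical checkpoint record: name, digest, UTC timestamp.

    Only this small record would be anchored on-chain; the data stays off-chain.
    """
    return {
        "name": name,
        "sha256": fingerprint(data),
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }


def verify(record: dict, data: bytes) -> bool:
    """Recompute the digest and compare it with the recorded one."""
    return fingerprint(data) == record["sha256"]


original = b"id,label\n1,cat\n2,dog\n"
record = make_record("pets-v1.csv", original)

print(verify(record, original))                            # True: unchanged
print(verify(record, original.replace(b"dog", b"cat")))    # False: one label edited
```

For multi-gigabyte datasets you would feed the file to `hashlib.sha256()` in chunks via `update()` rather than reading it into memory at once; the resulting digest is the same either way.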

It shifts trust from companies to cryptographic proof.

Bias can be corrected once visible; opacity hides it forever.

The Blockchain Fix: Turning Black Boxes into Glass Boxes

So how does this actually work?

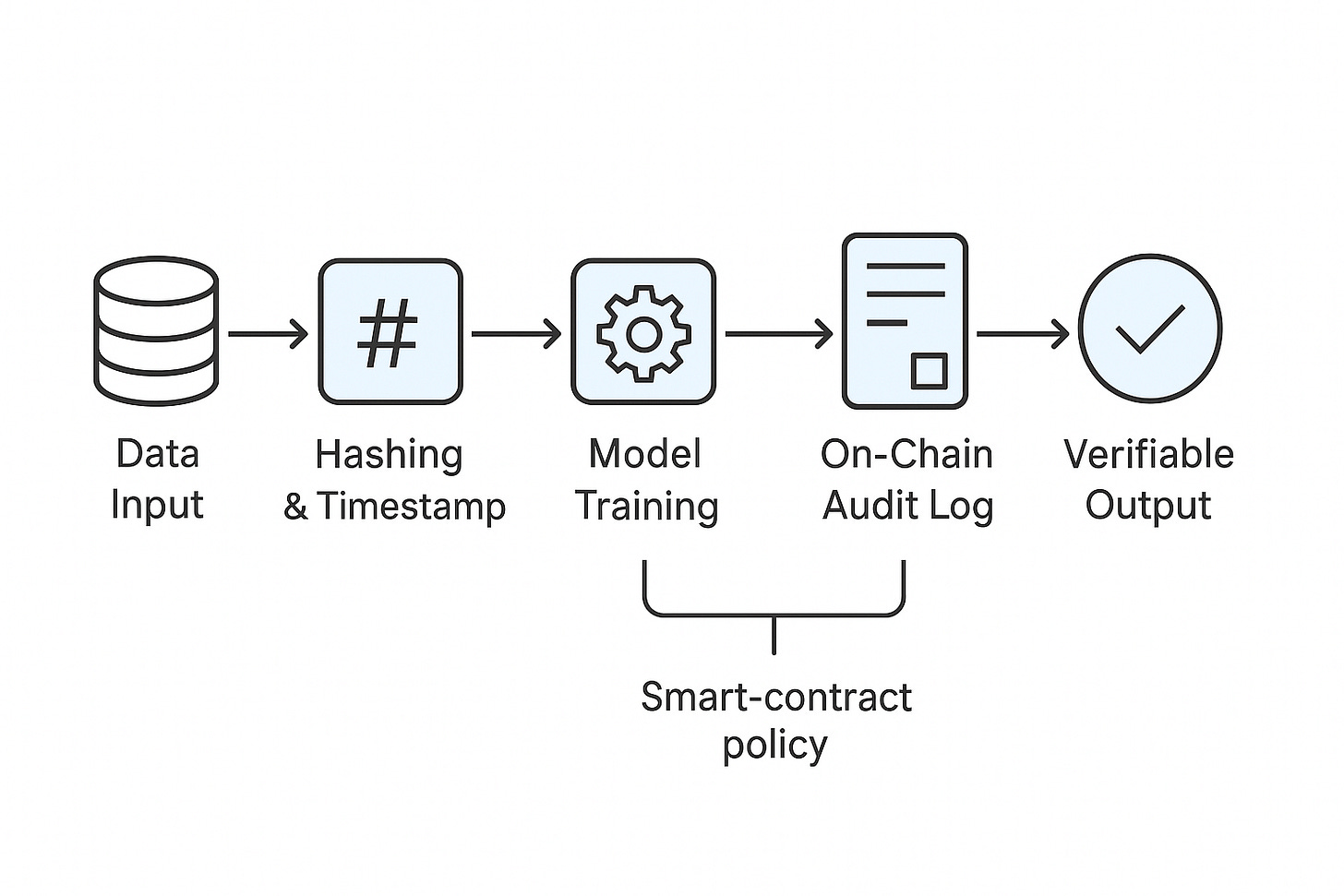

Think of blockchain as the ledger of record for AI’s entire life cycle.

Each stage of the workflow leaves a verifiable fingerprint:

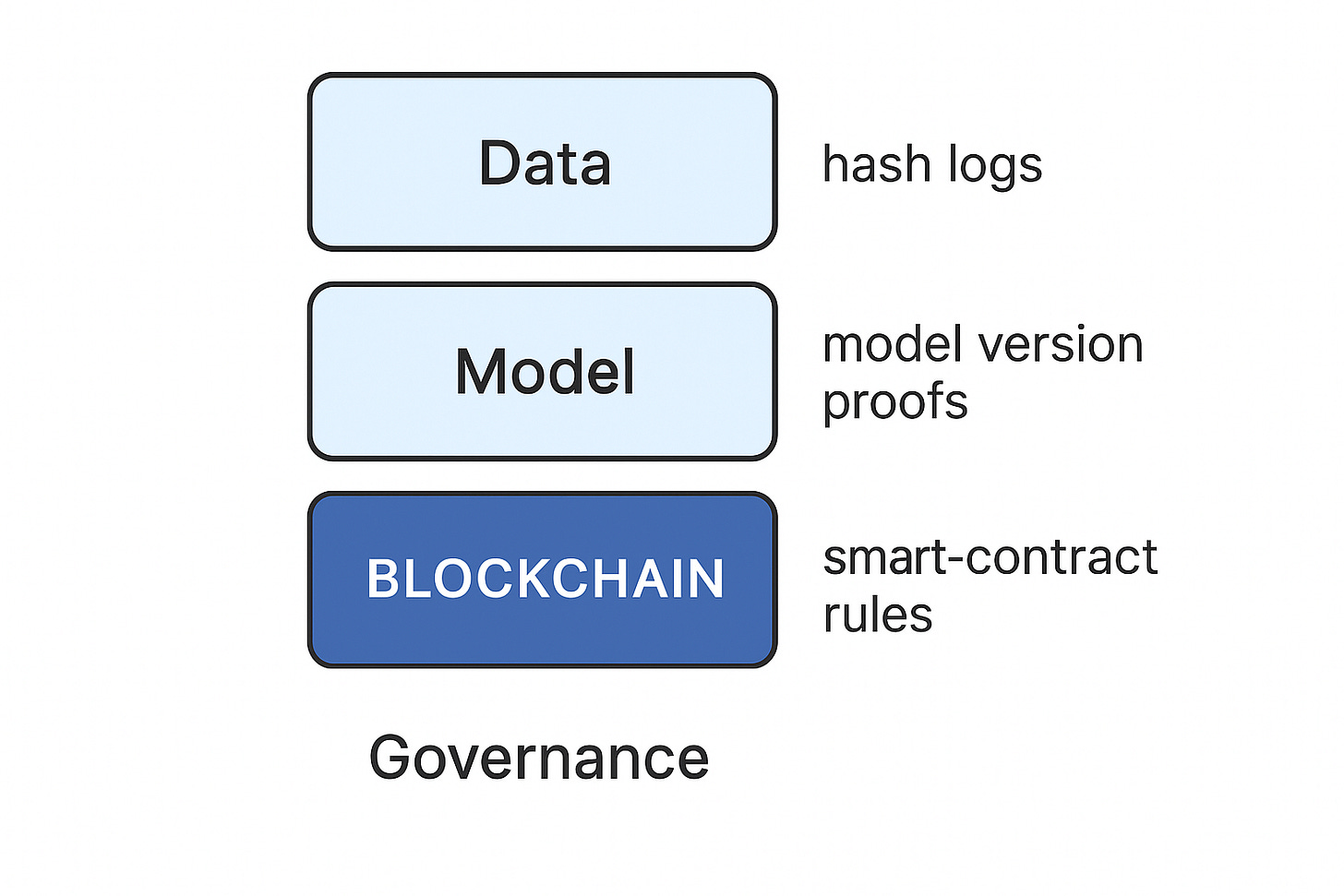

Data layer: Every dataset is hashed, creating a cryptographic fingerprint of the exact version used for training. Even if the data itself is stored off-chain (IPFS, Arweave, or enterprise servers), the hash acts as a permanent reference.

Model layer: Each training or fine-tuning run produces a new hash linked to its dataset. Auditors can confirm lineage without needing the data itself.

Governance layer: Smart contracts define who can access, modify, or monetize the model, turning opaque API calls into transparent, rule-based interactions.
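The data and model layers can be sketched together. In this illustration, each model version is a record naming the hash of its weights, the hash of its training dataset, and its parent version; the hash of that record becomes the version ID, so editing any field breaks verification. Everything here is an assumption for illustration: the field names, the canonical-JSON encoding, and the in-memory `registry` dict standing in for a ledger. The governance layer is not modeled.

```python
import hashlib
import json
from typing import Optional


def record_hash(record: dict) -> str:
    """Hash a record deterministically via canonical JSON (sorted keys, no spaces)."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def model_version(weights: bytes, dataset_hash: str, parent: Optional[str], config: dict) -> dict:
    """Build a hypothetical model-version record linking weights to their inputs."""
    record = {
        "weights_sha256": hashlib.sha256(weights).hexdigest(),
        "dataset_sha256": dataset_hash,
        "parent_version": parent,  # None for a model trained from scratch
        "config": config,
    }
    # The version ID is the hash of every field above, so any edit changes it.
    record["version_id"] = record_hash(record)
    return record


def verify_version(record: dict) -> bool:
    """Recompute the ID from the record's other fields and compare."""
    body = {k: v for k, v in record.items() if k != "version_id"}
    return record_hash(body) == record["version_id"]


def lineage(version_id: str, registry: dict) -> list:
    """Follow parent links from a version back to its root, newest first."""
    chain = []
    current = version_id
    while current is not None:
        chain.append(current)
        current = registry[current]["parent_version"]
    return chain


# Usage: a base model and one fine-tune, each tied to its own dataset hash.
base_ds = hashlib.sha256(b"base corpus").hexdigest()
tune_ds = hashlib.sha256(b"fine-tune set").hexdigest()
base = model_version(b"weights-v1", base_ds, None, {"epochs": 3})
tuned = model_version(b"weights-v2", tune_ds, base["version_id"], {"epochs": 1})
registry = {r["version_id"]: r for r in (base, tuned)}

print(lineage(tuned["version_id"], registry))  # the tuned version ID, then the base version ID
```

Because each ID covers its parent’s ID, an auditor holding only these small records can trace a fine-tune back to its base model and datasets without access to the data itself.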

Developers store proofs, not payloads.

The ledger anchors integrity while the heavy data stays off-chain.
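One widely used way to keep the on-chain part tiny is a Merkle tree: hash many artifacts or log records off-chain, anchor only the 32-byte root, and give an auditor a short inclusion proof for any single item. The sketch below is a generic Merkle construction in Python, not the format of any particular ledger; the function names are hypothetical, the 0x00/0x01 leaf and node prefixes follow the convention used in RFC 6962 (Certificate Transparency), and an unpaired node is simply carried up a level.

```python
import hashlib


def _sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def leaf_hash(item: bytes) -> bytes:
    # 0x00 prefix keeps leaf hashes distinct from internal-node hashes.
    return _sha256(b"\x00" + item)


def node_hash(left: bytes, right: bytes) -> bytes:
    # 0x01 prefix marks an internal node.
    return _sha256(b"\x01" + left + right)


def merkle_root_and_proof(items: list, index: int) -> tuple:
    """Return (root, proof) for items[index].

    Each proof step is (sibling_hash, sibling_is_left). An unpaired node at the
    end of a level is carried up unchanged and contributes no proof step.
    """
    if not 0 <= index < len(items):
        raise IndexError("index out of range")
    level = [leaf_hash(x) for x in items]
    proof = []
    pos = index
    while len(level) > 1:
        sibling = pos ^ 1  # the node paired with ours at this level
        if sibling < len(level):
            proof.append((level[sibling], sibling < pos))
        next_level = []
        for i in range(0, len(level), 2):
            if i + 1 < len(level):
                next_level.append(node_hash(level[i], level[i + 1]))
            else:
                next_level.append(level[i])  # odd node carried up
        level = next_level
        pos //= 2
    return level[0], proof


def verify_inclusion(item: bytes, proof: list, root: bytes) -> bool:
    """Rebuild the path from the leaf to the root and compare."""
    acc = leaf_hash(item)
    for sibling, sibling_is_left in proof:
        acc = node_hash(sibling, acc) if sibling_is_left else node_hash(acc, sibling)
    return acc == root


# 1,000 training-log records; only `root` would be written on-chain.
logs = [f"step={i}".encode() for i in range(1000)]
root, proof = merkle_root_and_proof(logs, 417)
print(root.hex())
print(verify_inclusion(logs[417], proof, root))  # True
```

The proof grows with the logarithm of the batch size, so checking one record out of a million takes about twenty hashes rather than a million.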

This mirrors financial auditing: we don’t print every transaction; we verify that ledger entries line up. Similarly, auditors can confirm that an AI system used approved data, trained under proper conditions, and hasn’t been silently retrained.
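The auditor’s side of that comparison can be sketched as three checks over an append-only, hash-chained log: the chain is intact, every training run used a dataset approved earlier in the log, and the deployed model is the last one recorded. This is a hypothetical Python illustration; the event names and the placeholder IDs such as `ds-a1` and `m-001` (standing in for real hashes) are assumptions.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(body: dict, prev: str) -> str:
    """Hash an entry's content together with the previous entry's hash."""
    payload = json.dumps({"body": body, "prev": prev}, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(log: list, body: dict) -> None:
    """Append an entry that commits to everything before it."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"body": body, "prev": prev, "hash": entry_hash(body, prev)})


def audit(log: list, deployed_model: str) -> list:
    """Return the problems found; an empty list means every check passed."""
    problems = []
    prev = GENESIS
    approved = set()
    latest_model = None
    for i, entry in enumerate(log):
        # 1. Integrity: each entry must link to its predecessor and match its own hash.
        if entry["prev"] != prev or entry["hash"] != entry_hash(entry["body"], entry["prev"]):
            problems.append(f"entry {i}: chain broken or entry altered")
        prev = entry["hash"]
        body = entry["body"]
        # 2. Approved data: training may only use datasets approved earlier in the log.
        if body["event"] == "dataset_approved":
            approved.add(body["dataset"])
        elif body["event"] == "train":
            if body["dataset"] not in approved:
                problems.append(f"entry {i}: trained on unapproved dataset {body['dataset']}")
            latest_model = body["model"]
    # 3. No silent retraining: the deployed model must be the last recorded run.
    if deployed_model != latest_model:
        problems.append("deployed model does not match the last recorded training run")
    return problems


log = []
append(log, {"event": "dataset_approved", "dataset": "ds-a1"})
append(log, {"event": "train", "dataset": "ds-a1", "model": "m-001"})

print(audit(log, "m-001"))  # []
print(audit(log, "m-002"))  # flags a model the log never recorded
```

A hash chain held by a single party can still be rewritten from scratch. Periodically publishing the latest entry hash to a shared ledger is what makes such a rewrite detectable.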

With this setup, regulators and enterprises gain a shared source of truth, a decentralized audit trail for algorithms.

Live Experiments: Who’s Actually Doing This

This isn’t just theory. The bridge between AI and blockchain is already being built, quietly, and mostly by enterprise teams who care about audit trails more than hype cycles.

IBM & Hyperledger Fabric: IBM has long supported Fabric, which some pilots use to track data lineage and model updates inside regulated industries.

Ocean Protocol: A marketplace where datasets and models are tokenized, each carrying metadata and on-chain records for verifiable provenance.

Fetch.ai: Builds decentralized AI agents that transact autonomously, logging operations on-chain for transparency.

EU AI Act: Requires technical documentation, event logging, and risk management for high-risk systems, effectively demanding auditable data lineage.

It’s early, but directionally clear: the smartest AI firms are preparing not just for intelligence, but for inspection.

How It Works in Practice

A transparent AI stack can follow a three-layer architecture, each layer anchored to a blockchain for accountability:

Data layer: Datasets are hashed and logged on initial use, creating immutable references.

Model layer: Each training run generates a new version hash; model cards can link directly to these proofs.

Governance layer: Smart contracts manage access and rights. Instead of trusting an API key, you trust programmable rules recorded on-chain.
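The governance idea, explicit rules plus a record of every request, can be illustrated without a blockchain at all. The Python class below is a toy, in-memory stand-in for an access-control smart contract, not contract code; the class name, actions, and account labels are hypothetical. A real deployment would use a contract language such as Solidity, where a rejected call typically reverts and leaves no event, so logging denials would need a different design.

```python
from dataclasses import dataclass, field


@dataclass
class ModelAccessPolicy:
    """Toy, in-memory stand-in for an access-control smart contract.

    Not chain code: it only mimics the idea that permissions are explicit
    rules and every call leaves a record.
    """

    owner: str
    permissions: dict = field(default_factory=dict)  # account -> set of allowed actions
    events: list = field(default_factory=list)  # (caller, action, target, allowed)

    def grant(self, caller: str, account: str, action: str) -> None:
        """Only the owner may grant an action to another account."""
        if caller != self.owner:
            raise PermissionError("only the owner can grant permissions")
        self.permissions.setdefault(account, set()).add(action)
        self.events.append((caller, "grant:" + action, account, True))

    def call(self, caller: str, action: str, model_version: str) -> bool:
        """Check a request against the rules and log the outcome either way."""
        allowed = caller == self.owner or action in self.permissions.get(caller, set())
        self.events.append((caller, action, model_version, allowed))
        return allowed


policy = ModelAccessPolicy(owner="lab")
policy.grant("lab", "auditor-1", "query")
print(policy.call("auditor-1", "query", "m-001"))      # True
print(policy.call("auditor-1", "fine_tune", "m-001"))  # False
```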

The goal isn’t to publish trade secrets, but to record integrity, the way accounting systems log transactions without exposing every line item.

If you’re building in this space:

Developers: store proofs, not payloads; use IPFS or Arweave for artifacts.

Enterprises: treat blockchain as your compliance ledger, not your marketing tool.

Investors: watch for startups building model registries or audit APIs — that’s where the real infrastructure is forming.

Figure 4: AI Transparency Stack, from raw data to smart contracts (Raw Data → Hash Record (on-chain) → Model Version Proof → Smart Contract Access Control → Output).

Challenges: What’s Still Broken

Transparency sounds simple, until you implement it.

Scalability: training logs can hit terabytes. On-chain proofs scale; full logs don’t.

Privacy: even hashed data can leak metadata or enable linkage attacks. Balancing openness and confidentiality is still unsolved.

Adoption: companies guard AI pipelines as proprietary territory. Until incentives align, transparency risks becoming a compliance checkbox.

Complexity: merging ledgers with ML workflows demands rare cross-domain expertise. It’s easier to say “trustworthy AI” than to build verifiable systems.
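The privacy point is easy to underestimate. Hashing a low-entropy value (say, a birth year or a yes/no flag in a record) does not hide it: anyone can hash every candidate and compare. Identical inputs also produce identical digests across registries, which is what makes linkage possible. A standard mitigation is a salted commitment, sketched below in Python; the function names and the birth-year example are hypothetical, and this addresses only these two leaks, not metadata such as timing or record size.

```python
import hashlib
import hmac
import secrets


def plain_hash(value: str) -> str:
    """Unsalted SHA-256: deterministic, so equal inputs give equal digests."""
    return hashlib.sha256(value.encode("utf-8")).hexdigest()


def commit(value: str) -> tuple:
    """Salted commitment: publish the digest, keep the 32-byte random salt private."""
    salt = secrets.token_bytes(32)
    digest = hashlib.sha256(salt + value.encode("utf-8")).hexdigest()
    return digest, salt


def open_commitment(digest: str, value: str, salt: bytes) -> bool:
    """Reveal value and salt to a chosen auditor, who recomputes and compares."""
    candidate = hashlib.sha256(salt + value.encode("utf-8")).hexdigest()
    return hmac.compare_digest(candidate, digest)


# Leak 1: a low-entropy field (a hypothetical birth year) is trivially guessed.
published = plain_hash("1987")
guess = next(y for y in map(str, range(1900, 2025)) if plain_hash(y) == published)
print(guess)  # 1987

# Leak 2: the same input hashed in two registries links the records.
print(plain_hash("1987") == plain_hash("1987"))  # True: linkable

# Salted commitments differ each time, and only the salt holder can open them.
d1, salt1 = commit("1987")
d2, salt2 = commit("1987")
print(d1 == d2)                            # False
print(open_commitment(d1, "1987", salt1))  # True
```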

Still, the direction is clear.

As regulation tightens and public trust erodes, proof will replace promise.

Accuracy impresses, but accountability scales.

2026 Outlook: Toward Verifiable AI

The next wave of AI progress won’t be about larger models; it’ll be about auditable ones.

By 2026, expect regulators and enterprises to treat transparency as infrastructure. The EU AI Act sets a precedent, and others will follow. Financial firms are already prototyping “AI audit trails” that log every dataset approval and model update like transaction ledgers.

New startups are emerging at this intersection: data-provenance networks, AI registries, and governance protocols linked to verifiable credentials.

Think of it as the accounting software layer for machine intelligence.

AI models may stay closed-source, but their footprints won’t.

We’ll move from explainable AI to verifiable AI, systems whose honesty can be checked without revealing inner code.

That’s where trust will live next: not in promises, but in proofs.

Personal Note

My first brush with “AI transparency” wasn’t in a lab; it was Spotify’s recommendation feed. One week it shifted completely, favoring songs I hadn’t played in years. Somewhere, an unseen model decided who I was.

That moment made something click: we’re all being profiled by invisible logic.

Blockchain can’t fix bias overnight, but it can give us a record of what happened and when, a starting point for accountability.

Because in the end, transparency isn’t about control.

It’s about consent.
