AI + Smart Contracts: Safer, Smarter Solidity Workflows

How artificial intelligence is quietly reshaping Solidity development, auditing, and on-chain security.

TL;DR:

AI helps Solidity developers write, test, and audit contracts faster and safer.

Key uses:

Generate boilerplate code (Copilot, ChainGPT).

Detect vulnerabilities like reentrancy, overflows, and logic errors.

Simulate attacks and predict risky patterns from past hacks.

AI is a force multiplier, not a replacement—human review is still essential.

Future: adaptive contracts, AI-assisted governance, and smarter, self-healing workflows.

Can AI Catch a Reentrancy Bug Before You Do?

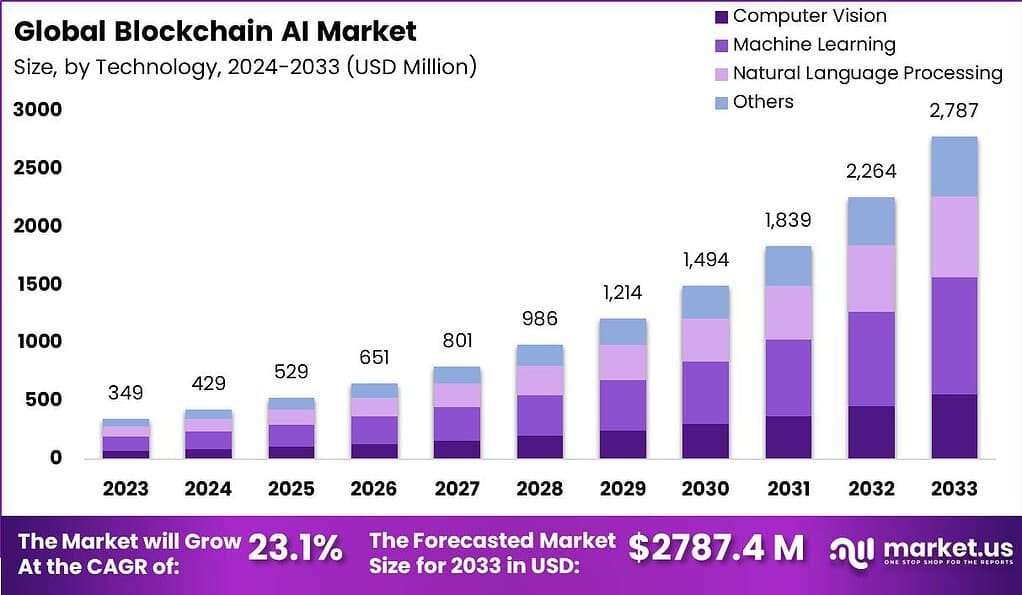

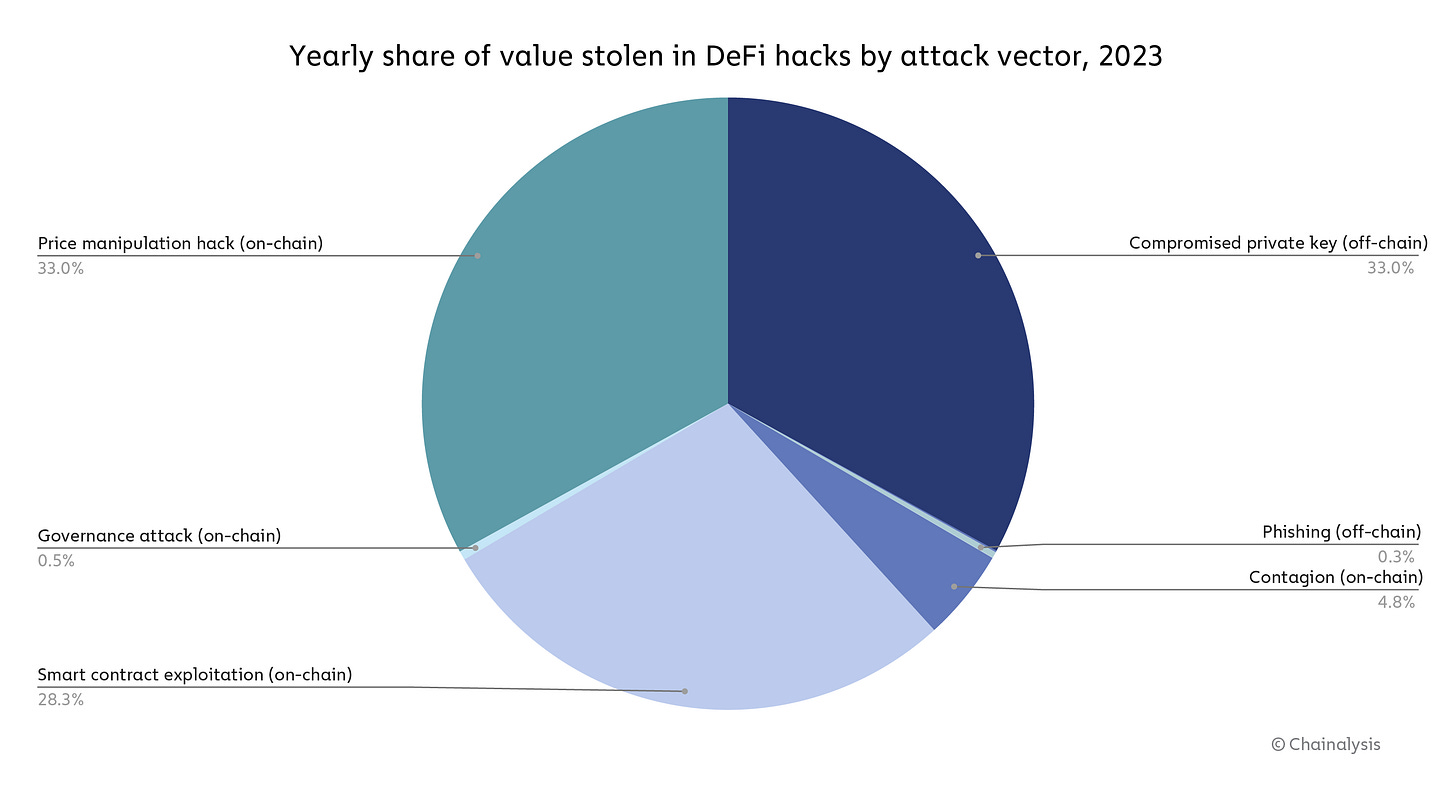

In 2024, more than $2.36 billion was stolen via on-chain exploits. Smart contract vulnerabilities alone accounted for roughly 14% of that, per Hacken’s Web3 security report.

One missed require() check, one unchecked external call, one gas-optimized line gone wrong, and suddenly, “immutable code” isn’t your friend anymore.

Every Solidity developer knows that familiar dread: pushing code to mainnet and praying the audit was good enough.

But what if the audit itself could learn from every past exploit, every reentrancy loop, every honeypot that slipped through?

That’s where AI steps in.

Not buzzword AI, but line-of-code copilots and anomaly models that flag potential exploits before deployment.

The question is, can they make smart contracts safer, or are we just moving the bugs one layer up, into the model itself?

The Bottleneck: Too Much Code, Too Few Eyes

Blockchain’s biggest security flaw isn’t Solidity. It’s scale.

Thousands of smart contracts go live every month, but the world has only a few hundred professional auditors.

A typical audit can cost $50K to $100K and take weeks to complete. Most protocols can’t afford that price or that wait, so they ship updates fast and pray later.

Human fatigue has a price.

The Euler Finance and KyberSwap exploits both slipped through manual reviews because even the best auditors can’t predict every edge case.

This is where AI steps in, not as a replacement, but as a force multiplier.

AI models can scan thousands of contract variations in minutes, test for known exploits like reentrancy or overflow, and flag anything suspicious for human review.

The result? A hybrid workflow that’s already changing how Solidity code is written, tested, and deployed.

The AI Advantage: What It Actually Does

Forget the headlines claiming “AI can write full smart contracts.” That’s marketing.

What’s actually working is augmented development: human plus machine.

Here’s what that looks like in practice:

1. Code Generation

Tools like GitHub Copilot, ChainGPT, and Replit Ghostwriter now generate Solidity boilerplate from natural language prompts.

Ask for “an ERC-721 with mint limits and royalties,” and you’ll get 90% of a usable contract, complete with standard library imports and modifiers.

It’s not perfect, but it’s fast.

The real power isn’t in replacing developers, but in removing the tedium of rewriting the same OpenZeppelin base patterns over and over.

2. Auditing and Bug Detection

This is where AI shines.

Models like CertiK Skynet AI and Trail of Bits’ ML-assisted fuzzers analyze code patterns across thousands of known exploits.

They’re now capable of detecting:

Reentrancy vulnerabilities (like the ones that drained The DAO)

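Integer overflow/underflow in arithmetic (mostly in pre-0.8 Solidity or inside unchecked blocks, since 0.8+ reverts on overflow by default)

Access control flaws, such as sensitive functions not restricted to owners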

Logic errors that open unintended execution paths
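To make the first item concrete, here is a minimal sketch of the kind of rule a scanner might start from: flag a function body where an external call comes before a balance write. It is a naive regex heuristic written for this article, not how CertiK or Trail of Bits tooling works, and the `balances` mapping name and both snippets are assumptions.

```python
import re

# Hypothetical, deliberately naive rule: within one function body, an external
# call followed later by a write to `balances[...]` is treated as suspicious.
EXTERNAL_CALL = re.compile(r"\.call\{value:|\.call\(|\.send\(|\.transfer\(")
STATE_WRITE = re.compile(r"\bbalances\s*\[[^\]]*\]\s*(?:-=|\+=|=(?!=))")


def flags_call_before_write(function_body: str) -> bool:
    """Return True if a balance write appears after the first external call."""
    call = EXTERNAL_CALL.search(function_body)
    if call is None:
        return False
    # Only look at the text that follows the external call.
    return STATE_WRITE.search(function_body, call.end()) is not None


# Assumed Solidity fragments, embedded as strings for illustration.
VULNERABLE = """
    uint256 amount = balances[msg.sender];
    (bool ok, ) = msg.sender.call{value: amount}("");
    require(ok, "transfer failed");
    balances[msg.sender] = 0;
"""

SAFER = """
    uint256 amount = balances[msg.sender];
    balances[msg.sender] = 0;
    (bool ok, ) = msg.sender.call{value: amount}("");
    require(ok, "transfer failed");
"""

print(flags_call_before_write(VULNERABLE))  # True
print(flags_call_before_write(SAFER))       # False
```

A rule this simple misses cross-function reentrancy and will flag harmless code, which is exactly why its output belongs in front of a human reviewer.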

3. Testing & Simulation

AI fuzzers simulate on-chain conditions: front-running, flash loan attacks, gas spikes, even malicious oracles.

These tests help devs understand how their contracts might behave in the wild, not just in the sandbox.

For example, an AI simulator might generate hundreds of “attack sequences,” revealing that a seemingly safe function can be spammed with zero-value transactions to trigger denial-of-service loops.
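The zero-value spam scenario can be sketched with a plain random fuzzer against a toy model of a contract. Everything here is an assumption made for illustration: the `ToyRewardPool` class, the gas figures, and the attacker budget. A real fuzzer would run against compiled bytecode, not a Python stand-in.

```python
import random
from typing import List, Optional

GAS_PER_PAYOUT = 30_000       # assumed cost of one payout loop iteration
BLOCK_GAS_LIMIT = 30_000_000  # assumed block gas limit


class ToyRewardPool:
    """Python stand-in for a contract that pays every depositor in one loop."""

    def __init__(self, min_deposit: int) -> None:
        self.min_deposit = min_deposit
        self.depositors: List[str] = []

    def deposit(self, sender: str, value: int) -> bool:
        if value < self.min_deposit:
            return False  # models a reverted transaction
        self.depositors.append(sender)
        return True

    def distribute_gas(self) -> int:
        # Gas needed to loop over every registered entry in one transaction.
        return len(self.depositors) * GAS_PER_PAYOUT


def fuzz(pool: ToyRewardPool, seed: int, budget_wei: int = 10**18,
         max_calls: int = 10_000) -> Optional[int]:
    """Return the call count at which distribute() stops fitting in a block."""
    rng = random.Random(seed)
    for n in range(1, max_calls + 1):
        value = rng.choice([0, 1, 10**16])
        if value > budget_wei:
            continue  # the attacker cannot afford this deposit
        sender = f"0x{rng.getrandbits(160):040x}"
        if pool.deposit(sender, value):
            budget_wei -= value
        if pool.distribute_gas() > BLOCK_GAS_LIMIT:
            return n  # invariant broken: payouts no longer fit in one block
    return None


print(fuzz(ToyRewardPool(min_deposit=0), seed=1))       # some call count
print(fuzz(ToyRewardPool(min_deposit=10**16), seed=1))  # None
```

With no minimum deposit, free entries pile up until the payout loop exceeds the assumed gas limit. With a minimum deposit, the same budget buys only 100 entries and the invariant holds.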

4. Predictive Defense

Perhaps the most promising trend: predictive vulnerability analysis.

By studying thousands of historic hacks, AI models now identify structural similarities between new contracts and previously exploited ones.

In plain terms: the model has seen this movie before, and knows how it ends.
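A rough way to picture “structural similarity” is token-shingle overlap. The sketch below is a simple stand-in, not a learned model: it renames identifiers to a placeholder and compares three-token windows with Jaccard similarity. The `KEEP` word list and the code snippets are illustrative assumptions.

```python
import re
from typing import List, Set, Tuple

# Tokens kept verbatim; every other identifier becomes "ID" so that renaming
# variables does not hide a structural match. The list is illustrative only.
KEEP = {"bool", "msg", "sender", "call", "value", "require"}


def normalize(source: str) -> List[str]:
    tokens = []
    for tok in re.findall(r"\w+|[^\w\s]", source):
        if tok in KEEP or not re.fullmatch(r"\w+", tok):
            tokens.append(tok)    # known word or punctuation
        elif tok.isdigit():
            tokens.append("NUM")  # numeric literal
        else:
            tokens.append("ID")   # any other identifier
    return tokens


def shingles(source: str, k: int = 3) -> Set[Tuple[str, ...]]:
    toks = normalize(source)
    return {tuple(toks[i:i + k]) for i in range(len(toks) - k + 1)}


def similarity(a: str, b: str) -> float:
    """Jaccard similarity between the shingle sets of two snippets."""
    sa, sb = shingles(a), shingles(b)
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0


KNOWN_EXPLOITED = """
(bool ok, ) = msg.sender.call{value: amount}("");
require(ok);
balances[msg.sender] = 0;
"""

RENAMED_COPY = """
(bool sent, ) = msg.sender.call{value: owed}("");
require(sent);
credits[msg.sender] = 0;
"""

UNRELATED = "return a + b;"

print(similarity(KNOWN_EXPLOITED, RENAMED_COPY))  # 1.0
print(similarity(KNOWN_EXPLOITED, UNRELATED))     # 0.0
```

Renaming variables does not fool the comparison, but reordering or restructuring the code would, which is one reason production tools reach for richer representations.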

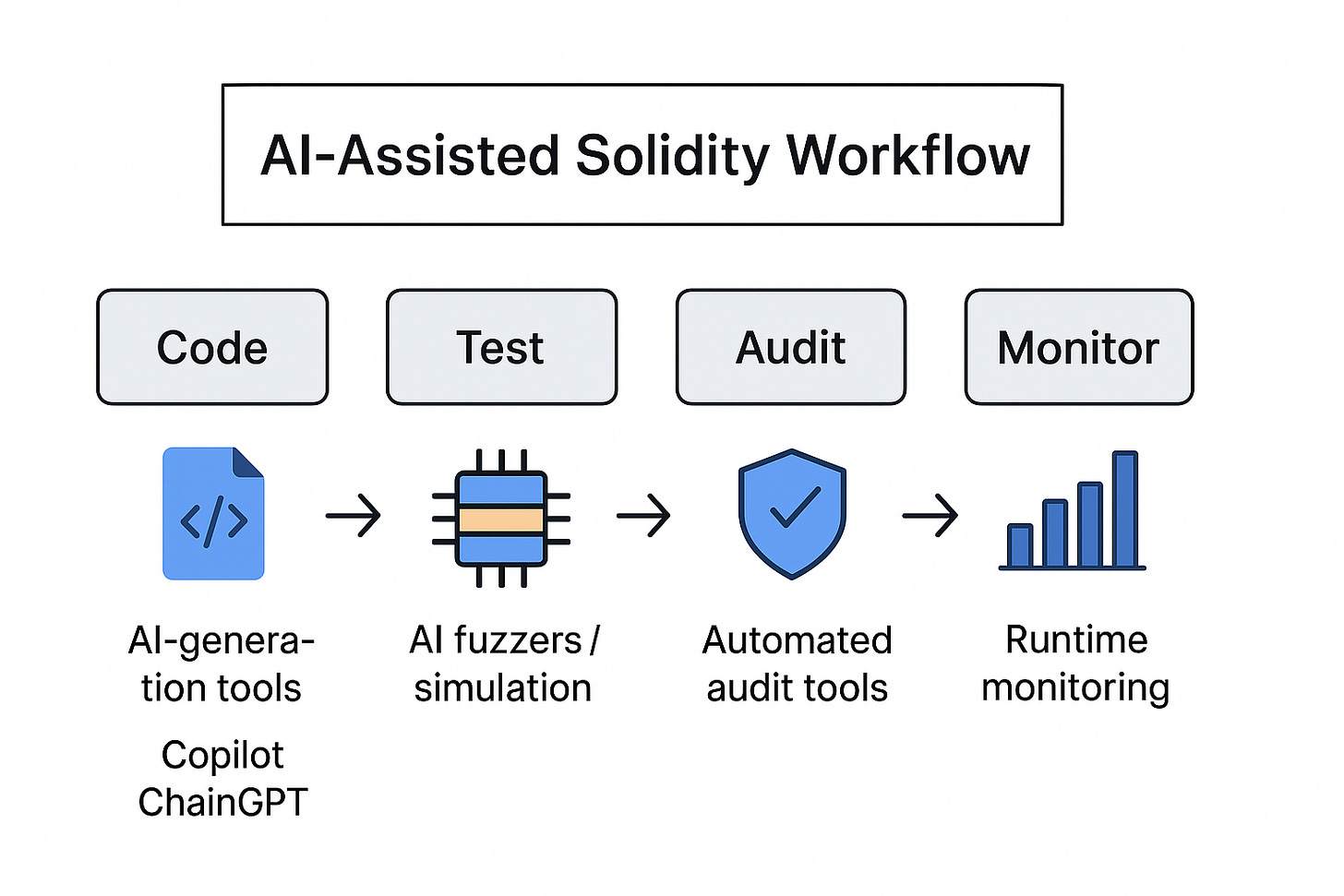

The Workflow: From Code to Mainnet

AI isn’t replacing developers. It’s quietly integrating into every stage of the workflow:

Write: Copilot drafts ERC-20 logic.

Test: AI fuzzers auto-generate attack vectors.

Audit: OpenZeppelin Defender’s AI module flags risky modifiers and missing checks.

Deploy: CertiK Skynet AI monitors live transactions for anomalies.

It’s a closed loop, one where every commit feeds back into a machine that learns, flags, and refines continuously.
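One way to keep a human in that loop is a deployment gate that refuses to ship while serious findings lack a sign-off. This is a minimal sketch with hypothetical names (`Finding`, `ready_for_mainnet`); it does not mirror any particular tool’s API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Finding:
    stage: str      # "write", "test", "audit", or "deploy"
    severity: str   # "low", "medium", or "high"
    reviewed_by_human: bool = False


def ready_for_mainnet(findings: List[Finding]) -> bool:
    """Allow deployment only when every medium/high finding has a human sign-off."""
    return all(
        f.reviewed_by_human
        for f in findings
        if f.severity in {"medium", "high"}
    )


findings = [Finding("audit", "high"), Finding("test", "low")]
print(ready_for_mainnet(findings))   # False: the high finding is unreviewed

findings[0].reviewed_by_human = True
print(ready_for_mainnet(findings))   # True
```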

When I tested this workflow myself, I saved about an hour of coding time, but spent three verifying the AI’s output.

It wasn’t plug-and-play.

Yet that forced paranoia was the point. It made me think harder about every assumption.

Case Studies: Who’s Doing It Right

CertiK Skynet AI

Monitors 2,000+ live contracts, analyzing millions of transactions daily. It flags unusual behavior, like sudden token mint spikes or owner role changes, before exploits occur.
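To give a feel for what a mint-spike alert could look like, here is a small sketch that compares each mint against a rolling median. It is an illustration only and makes no claim about how Skynet works internally. The window size, threshold factor, and sample amounts are all assumptions.

```python
from collections import deque
from statistics import median


class MintSpikeMonitor:
    """Flag a mint that is far larger than the recent median mint size."""

    def __init__(self, window: int = 20, factor: float = 10.0,
                 warmup: int = 5) -> None:
        self.history = deque(maxlen=window)
        self.factor = factor
        self.warmup = warmup

    def observe(self, minted: float) -> bool:
        is_spike = (
            len(self.history) >= self.warmup
            and minted > self.factor * median(self.history)
        )
        if not is_spike:
            self.history.append(minted)  # keep spikes out of the baseline
        return is_spike


monitor = MintSpikeMonitor()
mints = [100, 120, 90, 110, 105, 95, 1_000_000, 100]
print([monitor.observe(m) for m in mints])
# [False, False, False, False, False, False, True, False]
```

The median is used instead of the mean so that one large mint does not immediately drag the baseline upward.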

OpenZeppelin Defender + AI

Used by Aave and Synthetix, Defender now includes predictive checks for governance proposals, catching potential execution risks before votes finalize.

Avalanche Foundation’s AI Fund

Launched a $50M program for adaptive contracts that can modify their logic in response to market conditions, an early step toward self-healing smart contracts.

ChainGPT

Provides generation, debugging, and auditing within one interface. Devs can prompt, deploy, and verify contracts end-to-end without leaving the platform.

Limitations: Where AI Still Breaks Down

Let’s not romanticize this. AI has major blind spots, especially around context and intent.

Here’s where it fails most often:

Context Blindness: It flags “vulnerabilities” in economic logic that’s actually intentional (like oracle delay buffers).

False Positives: Over-alerting creates “audit fatigue.”

Data Leakage: Some AI audit tools train on shared datasets, which can expose private code to other users of the model.

Overreliance: Teams skip human reviews assuming “AI already checked it.”

In a recent Code4rena benchmark, AI caught 62% of known vulnerabilities. Human auditors caught 92%.

It’s a strong assist, not a substitute.

As one veteran auditor told me:

“AI will never replace paranoia. It just makes it scalable.”

What Comes Next: Smarter, Adaptive Contracts

AI’s next evolution isn’t about audits. It’s about autonomy.

Agentic Contracts:

Ava Labs is experimenting with smart contracts that can self-adjust parameters (like slippage tolerance or gas limits) based on on-chain signals.
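Self-adjusting parameters are only safe if the adjustment is bounded. The sketch below shows one hedged way to do that for slippage tolerance: scale toward a volatility-based target, cap the change per update, and clamp to hard limits. The function name, the basis-point bounds, and the target volatility are assumptions, not anything Ava Labs has published.

```python
def adjust_slippage_bps(current_bps: int, volatility: float, *,
                        target_volatility: float = 0.02,
                        min_bps: int = 10, max_bps: int = 300,
                        max_step_bps: int = 25) -> int:
    """Move slippage tolerance toward a volatility-scaled target, within bounds."""
    desired = current_bps * (volatility / target_volatility)
    # Limit how far a single update can move the parameter.
    step = max(-max_step_bps, min(max_step_bps, desired - current_bps))
    # Clamp the result to hard floor and ceiling values.
    return int(max(min_bps, min(max_bps, round(current_bps + step))))


print(adjust_slippage_bps(50, 0.02))   # 50  (volatility at target)
print(adjust_slippage_bps(50, 0.10))   # 75  (step capped at +25)
print(adjust_slippage_bps(290, 0.10))  # 300 (clamped to the ceiling)
print(adjust_slippage_bps(20, 0.0))    # 10  (clamped to the floor)
```

The per-update cap matters as much as the floor and ceiling: it keeps a single manipulated signal from swinging the parameter to an extreme in one step.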

ZK + AI:

Pairing zero-knowledge proofs with AI models could let a contract verify that a model ran correctly on private user data without that data ever being revealed on-chain.

AI Governance:

Some DAOs are piloting systems where AI pre-screens governance proposals for exploit potential before voting begins.
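Before any model gets involved, a pre-screen can start with plain rules over a proposal’s decoded actions. The sketch below is a hypothetical example: the `ProposalAction` type, the watch list, the 10% treasury threshold, and the placeholder addresses are all assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List

# Assumed watch list of function names that change who controls the protocol.
SENSITIVE_FUNCTIONS = {"upgradeTo", "transferOwnership", "grantRole"}


@dataclass(frozen=True)
class ProposalAction:
    target: str         # contract the proposal would call (placeholder names)
    function: str       # function name decoded from the calldata
    value_wei: int = 0  # native token amount sent with the call


def prescreen(actions: List[ProposalAction], treasury_wei: int,
              max_fraction: float = 0.10) -> List[str]:
    """Return human-readable warnings for actions that deserve a closer look."""
    warnings: List[str] = []
    for action in actions:
        if action.function in SENSITIVE_FUNCTIONS:
            warnings.append(f"privileged call: {action.function} on {action.target}")
        if action.value_wei > treasury_wei * max_fraction:
            warnings.append(f"large transfer: {action.value_wei} wei to {action.target}")
    return warnings


proposal = [
    ProposalAction("0xPool", "setFee"),
    ProposalAction("0xProxy", "upgradeTo"),
    ProposalAction("0xRecipient", "transfer", value_wei=400 * 10**18),
]
for warning in prescreen(proposal, treasury_wei=1_000 * 10**18):
    print(warning)
```

Warnings like these are a prompt for voters and reviewers to look closer, not a verdict on the proposal.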

Within a year, “AI-audited” could be as normal as “KYC verified.”

So where does the community really stand: are we automating trust, or just outsourcing it?

The Human-in-the-Loop Era

The future isn’t AI vs. auditors. It’s AI with auditors.

Developers who learn to prompt, verify, and challenge AI suggestions will gain a massive edge.

The workflow is evolving into co-authorship: contracts built by humans, refined by machines, and verified by both.

We’re entering a phase where “smart contracts” will finally live up to their name, autonomous yet accountable.

The question isn’t whether AI can audit your code. It’s whether you can still audit the AI.
